Initial enthusiasm from promising benchmarks and demonstrations is overshadowed by subsequent criticism.

Google’s introduction of Gemini, an AI model designed to narrow the gap between the tech giant and OpenAI, was initially well received. The presentation featured robust benchmarks, a captivating video demonstration, and immediate availability (albeit for a scaled-down version), all of which signaled confidence.

However, the initial optimism waned as AI experts and enthusiasts scrutinized the specifics and identified shortcomings. While Gemini holds promise and could potentially challenge GPT-4’s dominance in the future, Google’s unclear communication strategy has forced it into a defensive position.

Emma Matthies, the lead AI engineer at a prominent North American retailer (speaking for herself and not on behalf of her employer), expressed skepticism: “There are more questions than answers. I noticed a discontinuity between the way [Google’s Gemini video demo] was presented and the actual details provided in Google’s technical blog.”

The video demo, titled “Hands-on with Gemini,” was released on YouTube alongside the unveiling of Gemini. It is brisk, friendly, and entertaining, with easily understandable visual examples. However, it also overstates what Gemini can do.

According to a Google representative, the demo “displays real prompts and outputs from Gemini.” Despite this assurance, the edited video leaves out important details. The interaction with Gemini took place through text, not voice, and the visual problems the AI solved were presented as still images, not a live video feed. Furthermore, Google’s blog describes prompts that do not appear in the demo. For instance, when Gemini was asked to recognize a game of rock, paper, scissors from hand gestures, it was given the hint “it’s a game,” a detail omitted from the video.

But that’s only the beginning of the challenges facing Google. AI developers soon recognized that Gemini’s capabilities were not as groundbreaking as they initially seemed.

“If you examine the capabilities of GPT-4 Vision and create the appropriate interface for it, it’s comparable to Gemini,” says Matthies. “I’ve undertaken similar projects as side endeavors, and there are experiments on social media, like the ‘David Attenborough is narrating my life’ video, which was exceptionally amusing.”

On December 11, just five days after Gemini was introduced, an AI developer named Greg Sadetsky replicated the Gemini demo using GPT-4 Vision. He then ran a direct comparison between Gemini and GPT-4 Vision, and the results did not favor Google.

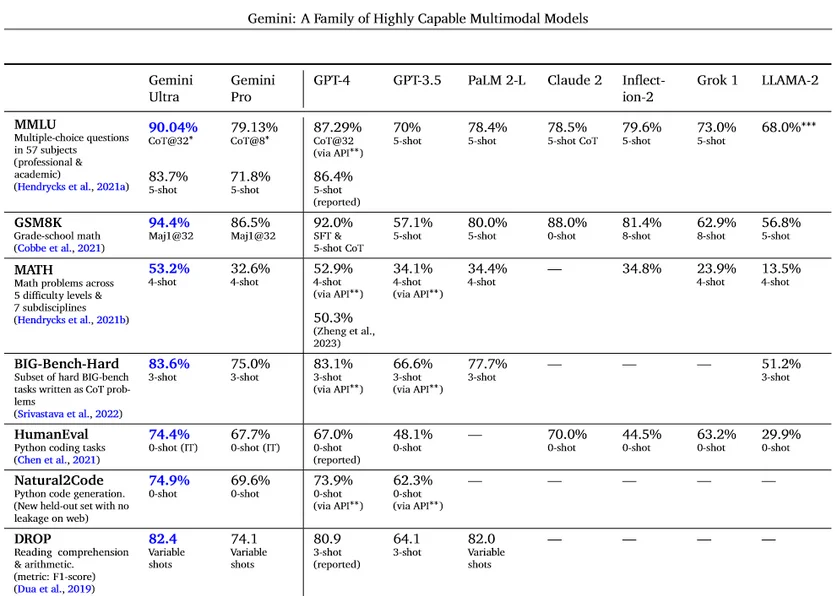

Criticism is also directed at Google’s benchmark data. Google claims that Gemini Ultra, the largest model in the family, outperforms GPT-4 on various benchmarks. While this is generally accurate, the cited figures seem chosen to portray Gemini in the most favorable light.

Google measured performance differently from others in the field. The way a user prompts an AI model can significantly affect its performance, so comparisons are meaningful only when both models are tested with the same prompting strategy.

For instance, GPT-4’s reported score on the massive multitask language understanding (MMLU) benchmark was obtained with few-shot prompting. (Asking a question with no examples is termed “zero-shot” prompting; supplying a few worked examples first is “few-shot” prompting.) Google’s headline figure for Gemini, by contrast, was obtained with chain-of-thought prompting, in which the model is asked to reason step by step before answering, a distinction noted by Richard Davies, lead artificial intelligence engineer at Guildhawk. “It’s not a fair comparison.”
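To make the difference between these prompting styles concrete, here is a minimal sketch of how the same question might be wrapped three ways. The function names, the prompt wording, and the sample questions are illustrative assumptions, not the prompts Google or OpenAI actually used in their evaluations.

```python
# Illustrative only: these templates are assumptions, not the prompts
# used in any published MMLU evaluation.

def zero_shot(question: str) -> str:
    """Ask the question with no examples and no extra context."""
    return f"Question: {question}\nAnswer:"


def few_shot(question: str, examples: list[tuple[str, str]]) -> str:
    """Prepend a few solved examples before asking the real question."""
    shots = "\n\n".join(f"Question: {q}\nAnswer: {a}" for q, a in examples)
    return f"{shots}\n\nQuestion: {question}\nAnswer:"


def step_by_step(question: str) -> str:
    """Ask the model to write out its reasoning before the final answer."""
    return (
        f"Question: {question}\n"
        "Think step by step, then give the final answer.\n"
        "Reasoning:"
    )


if __name__ == "__main__":
    # Hypothetical sample data, for demonstration only.
    solved = [("What is 2 + 2?", "4"), ("What is 3 * 3?", "9")]
    target = "What is 7 - 5?"
    for build in (zero_shot, lambda q: few_shot(q, solved), step_by_step):
        print(build(target))
        print("-" * 40)
```

Because each wrapper gives the model a different amount of context and room to reason, scores obtained under one style are not directly comparable with scores obtained under another.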

While Google’s paper on Gemini presents a variety of comparisons, its marketing selectively highlights whichever prompting strategy produces the better result. Furthermore, the marketing focuses solely on Gemini Ultra, which is not yet accessible to the public. Gemini Pro, the version currently available, posts less impressive results than the ones highlighted.

The issues surrounding the presentation of Gemini have somewhat overshadowed its announcement. However, if one looks beyond the flawed marketing, Gemini stands out as an impressive achievement.

Being multimodal sets Gemini apart, enabling it to reason across various mediums such as text, images, audio, code, and other forms of media. While this characteristic is not unique to Gemini, many multimodal models are either not publicly accessible, challenging to use, or designed for specific tasks. This has allowed OpenAI’s GPT-4 to maintain dominance in the field.

Emma Matthies expresses her anticipation, stating, “At the very least, I am looking forward to there being a strong alternative and close competitor to GPT-4 and the new GPT-4 vision model. Because currently, there just isn’t anything in the same class.”

Gemini Ultra’s impressive benchmark results show a small yet significant edge over GPT-4

Meanwhile, Davies finds Gemini’s benchmark performance intriguing, acknowledging the cherry-picking involved yet highlighting a substantial improvement in several comparable scenarios.

He notes, “It’s about a four percent improvement [in MMLU] from GPT-4’s 86.4 percent to Gemini’s 90 percent. But in terms of how much actual error is reduced, it’s reduced by more than 20 percent… that’s quite a lot.” Even slight reductions in error carry significant implications when models handle millions of requests daily.
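The arithmetic behind Davies’s point can be checked directly. The short sketch below uses only the two accuracy figures quoted above (86.4 percent and 90 percent); the function name is a hypothetical helper introduced for illustration.

```python
def error_reduction(old_accuracy: float, new_accuracy: float) -> tuple[float, float]:
    """Return (gain in percentage points, relative error reduction in percent)."""
    old_error = 100.0 - old_accuracy   # share of questions answered wrong before
    new_error = 100.0 - new_accuracy   # share of questions answered wrong after
    point_gain = old_error - new_error
    relative_reduction = point_gain / old_error * 100.0
    return point_gain, relative_reduction


# Figures quoted in the article: GPT-4 at 86.4 percent, Gemini Ultra at 90 percent.
point_gain, relative_reduction = error_reduction(86.4, 90.0)
print(f"{point_gain:.1f} points gained, {relative_reduction:.1f}% fewer errors")
# prints "3.6 points gained, 26.5% fewer errors"
```

The error rate falls from 13.6 percent to 10 percent, so a gain of about 3.6 percentage points in accuracy corresponds to roughly a quarter fewer wrong answers, consistent with the “more than 20 percent” in the quote.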

The future of Gemini remains uncertain, hinging on two key unknowns: the release date of Gemini Ultra and the arrival of OpenAI’s GPT-5. While users can currently explore Gemini Pro, its larger counterpart is slated for release in 2024. Given how quickly AI is evolving, it is hard to predict how Ultra will compare when it arrives, and the wait gives OpenAI ample time to counter with a new model or a moderately enhanced version of GPT-4.
